Introduction to Feature Scaling

- Feature scaling is usually the last step in feature transformation.

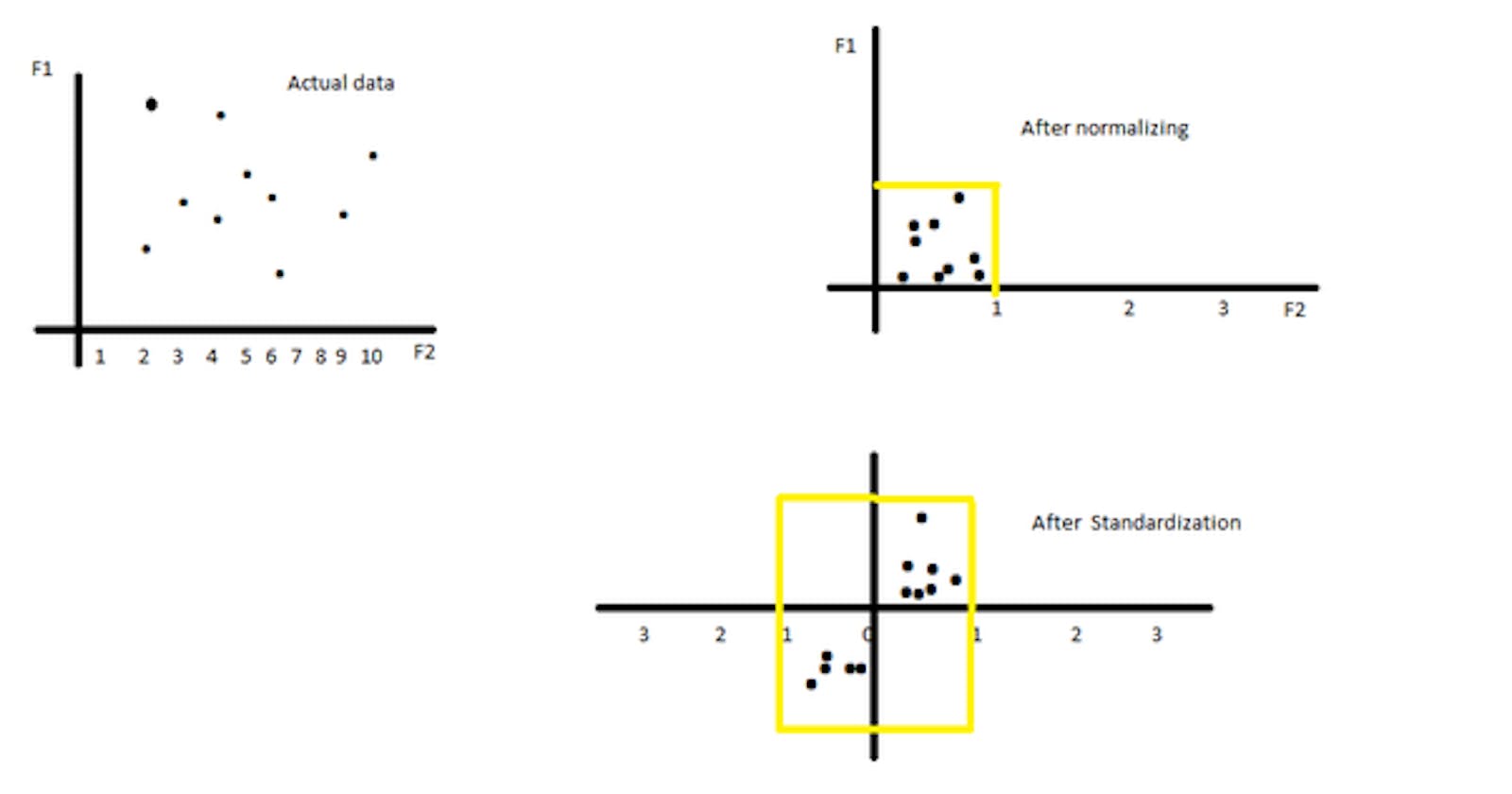

- Feature Scaling is a method to bring the independent features present in the data onto a common scale or a fixed range.

Methods to do Feature Scaling

- Two common methods of feature scaling are Standardization and Normalization.

Standardization

- It is also called Z-score normalization. It mean-centres the column and then scales it to unit variance.

- After standardization, the standardized column has a mean of 0 and a standard deviation of 1.

- This does not affect the shape of the distribution. Only its location and scale change.
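The standardization described above can be sketched with the usual Z-score formula, z = (x - mean) / standard deviation. This is a minimal illustration using only the Python standard library. The function name `standardize` and the `ages` column are hypothetical, and the population standard deviation is assumed.

```python
from statistics import fmean, pstdev


def standardize(values):
    """Return the z-score of every value: (x - mean) / standard deviation."""
    mu = fmean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        raise ValueError("cannot standardize a constant column")
    return [(x - mu) / sigma for x in values]


ages = [18.0, 25.0, 32.0, 47.0, 60.0]  # hypothetical sample column
z = standardize(ages)

# The standardized column has mean ~0 and standard deviation ~1,
# up to floating-point rounding.
print(abs(fmean(z)) < 1e-9, abs(pstdev(z) - 1.0) < 1e-9)
```

A constant column has zero standard deviation, so the sketch raises an error rather than dividing by zero.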

Normalization

- The goal of Normalization is to change the value of numeric columns in the data set to a common scale without distorting the shape of the distribution.

- In normalization (min-max scaling), we rescale the values of the input columns so that they always lie in the range [0, 1].
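One common way to map a column onto [0, 1] is min-max scaling, x' = (x - min) / (max - min). The sketch below assumes that formula. The function name `min_max_normalize` and the `salaries` column are hypothetical.

```python
def min_max_normalize(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot normalize a constant column")
    return [(x - lo) / (hi - lo) for x in values]


salaries = [30000.0, 45000.0, 60000.0, 120000.0]  # hypothetical sample column
scaled = min_max_normalize(salaries)

# Every scaled value lies in [0, 1]; the extremes map to the endpoints.
print(min(scaled), max(scaled))
```

Because the minimum and maximum define the new range, a single extreme value can squeeze the remaining values into a narrow part of [0, 1].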

Difference between Standardization and Normalization

- In normalization, the values are rescaled to lie in [0, 1], but nothing is guaranteed about the mean and standard deviation of the resulting data. In standardization, the mean and standard deviation of the resulting data are 0 and 1 respectively, but the values are not confined to a fixed range.

Similarities between Normalization and Standardization

- Both are linear transformations of the column, so the shape of the distribution that the column follows remains the same after applying either Standardization or Normalization.
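As a rough check of the shape claim, one can compare a shape statistic such as skewness before and after each transformation. The sketch below assumes skewness (the third standardized moment) as the shape summary. The `skewness` helper and the `data` column are hypothetical.

```python
from statistics import fmean, pstdev


def skewness(values):
    """Third standardized moment: a simple numeric summary of shape."""
    mu, sigma = fmean(values), pstdev(values)
    return fmean([((x - mu) / sigma) ** 3 for x in values])


data = [1.0, 2.0, 2.0, 3.0, 10.0]  # hypothetical right-skewed column

# Standardization: subtract the mean, divide by the standard deviation.
mu, sigma = fmean(data), pstdev(data)
standardized = [(x - mu) / sigma for x in data]

# Normalization (min-max): subtract the minimum, divide by the range.
lo, hi = min(data), max(data)
normalized = [(x - lo) / (hi - lo) for x in data]

# All three skewness values agree up to floating-point rounding.
print(skewness(data), skewness(standardized), skewness(normalized))
```

Both transformations only shift and rescale the values by a positive factor, which is why a scale-free statistic like skewness does not change.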